Part 3

The prevalence and expansion of artificial intelligence could fill volumes of blog posts, and it probably will in the future, but for now I want to wrap up this series with the point I originally hoped to make in a single post. To summarize what I have learned so far from my own research and experience: 1) humans are frequently enthralled by man vs. machine stories involving artificial intelligence that can match or surpass our own abilities; 2) while some of that technology is theoretically possible, it does not exist at present; 3) so we must ask why we are so fixated on a hypothetical situation that isn’t grounded in reality.

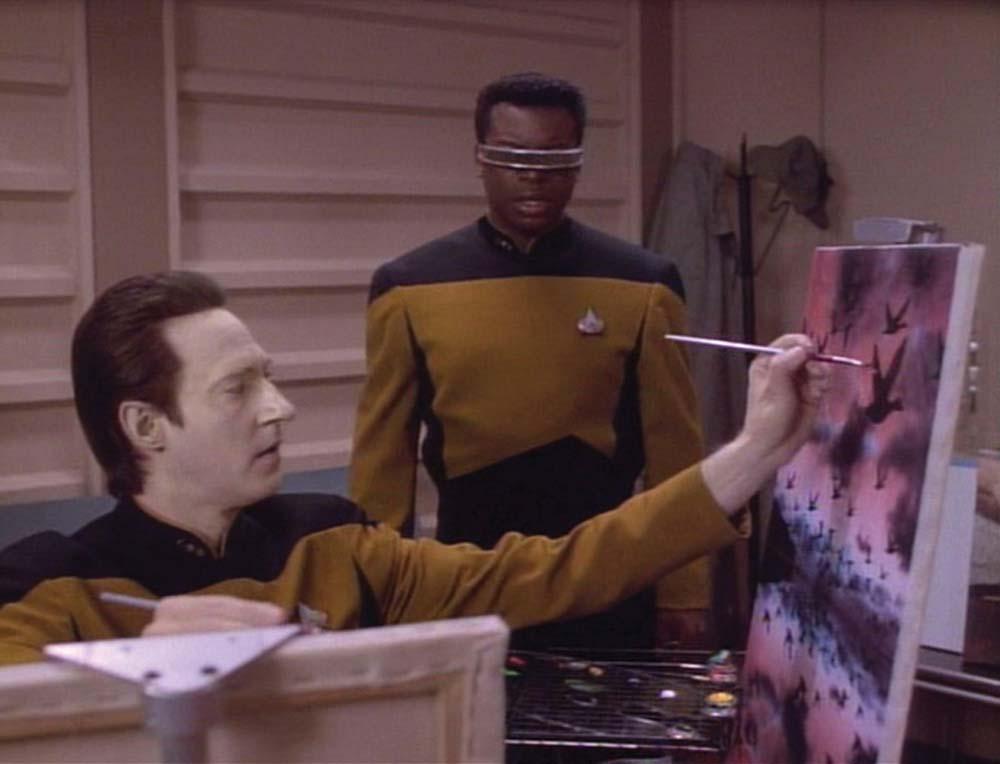

Image credit: [1]

A short and easy answer would be that it’s human nature to fixate on disaster scenarios, whether or not they’re realistic. That’s true, but this genre in particular gets a disproportionate amount of attention given how unlikely it is to unfold. It’s also interesting that many of these man vs. machine narratives include another layer, one that isn’t always explicit but is very much a part of our lives today. I am specifically talking about how AI is used by people or, to be more precise, by organizations that can put AI-based tools to work.

Behind the Curtain

Many of the man vs. machine movies we have come to know and love involve an antagonistic AI that is ultimately controlled by a human or a human-run corporation seeking power of some kind. Diverting attention from those who really hold power to the “troublesome” AI perpetuates the idea of AI as the enemy (for the protagonists and/or the audience) when that may not be the case. Watching this play out on screen raises the possibility of a similar dynamic in the real world: humans who use AI for less than ethical purposes could absolutely benefit from a widespread perception that rogue technology is the real concern.

Now, to be clear, I am not saying that the big tech corporations that track our shopping, messaging, and browsing habits are also behind the box-office blockbusters that pit humans against machines in existential battles (to reinforce the notion that machines are the villains in our lives and distract us from who really has control). That strikes me as nothing more than a conspiracy theory, though an amusing one that could make for a really cool movie. But the best conspiracy theories play on our fears, so it’s still worth unpacking our perceptions of AI to understand what’s behind them.

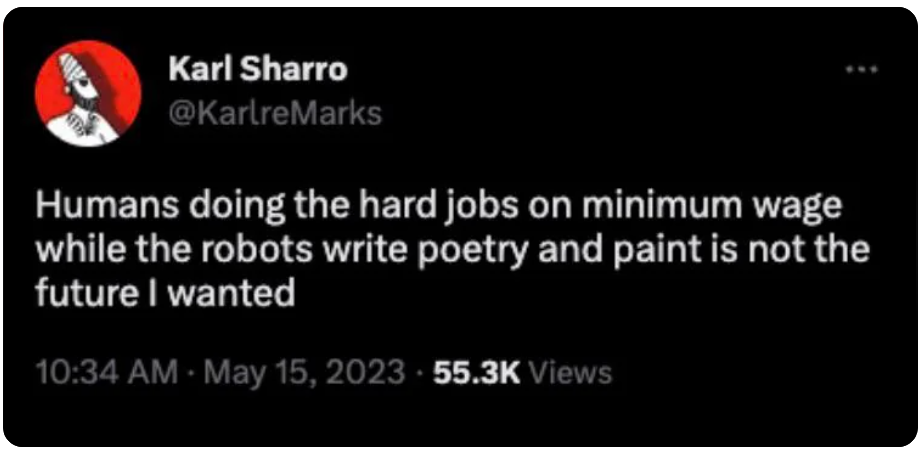

Image credit: [2]

Apart from AI becoming self-aware, surpassing human capabilities, and enslaving or eradicating the human race, which we’ve established won’t be happening any time soon, commonly cited fears about AI range from the weaponization of technology (by humans against other humans, whether at the corporate or government level) to job elimination (by human-run companies pursuing cost-saving measures that ultimately reduce employment opportunities for humans) [3]. AI tools have even behaved in seemingly biased ways, as in the case of facial recognition [4] and crime prediction software [5], but even then, AI is only doing what its human developers designed and trained it to do, and those developers have their own subconscious biases. Digging (not even deeply) into any of these examples shows that AI is a tool, not the antagonist itself.

What We Gain and What We Lose

A tool is not inherently good or bad; what matters is how it is used (a hammer, for example, can be used to assemble or disassemble something). Thinking of AI as nothing more than a tool reminds us that we bear responsibility for how we use it and that it has limits on what it can do. When we rely on that tool too much (as I described in last week’s post [6]), our brains begin to function differently: we spend more time absorbing information and less time contemplating it. In the words of the previously referenced Atlantic article, “as we come to rely on computers to mediate our understanding of the world, it is our own intelligence that flattens into artificial intelligence.” [7]

Humans have an incredible capacity for wisdom and discernment (not just intelligence) and for curiosity and creativity (not just research), but those capacities need to be exercised if we want to maintain them. And (speaking as someone who has barely done any physical exercise all year) keeping them up takes work. The more passively we absorb what is presented to us, without taking our own steps to examine it, practice healthy skepticism, connect it to other things, or create something inspired by it, the less able we become to use our uniquely human attributes. (I catch myself doing this sometimes with these blog posts: simply assembling information from different sources instead of finding an insight and adding some unique value.)

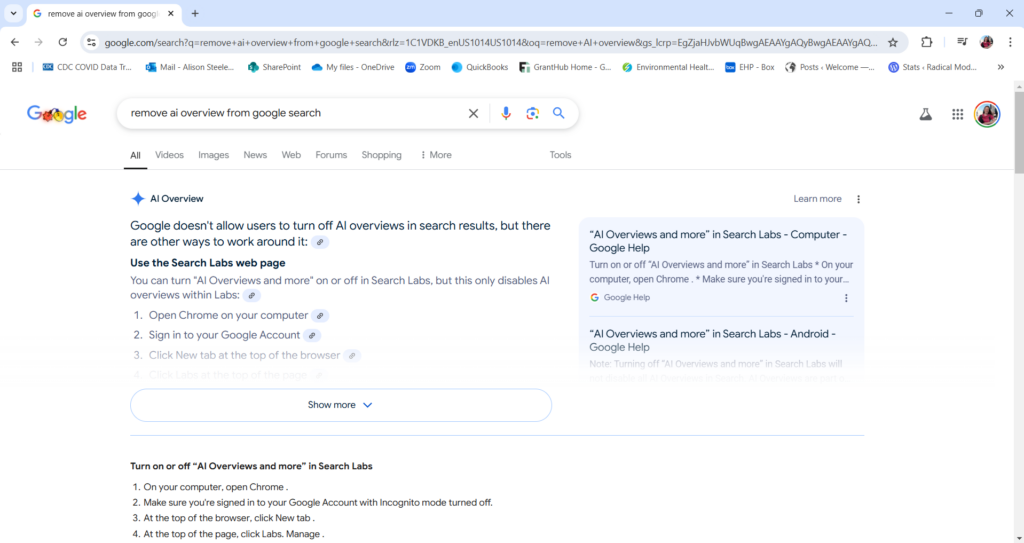

Image credit: [8]

To grow into our full human potential, we need downtime to contemplate, to express ourselves, and to give back (not just take in). Of course, it’s easier and more comfortable not to do those things, and some entities benefit greatly from our not doing them (and even encourage us to skip them). Now that we spend so much of our lives online, our attention has become a commodity, and we are complicit in making ourselves better targets: the more we are bombarded by short pieces of information coming at us from different directions, the more our brains adapt to and come to expect those kinds of interactions. Meanwhile, we become more reactive and less independent.

Pushing Back

Speaking as a former business student, I can attest that it is difficult to sell things to people who are fully self-actualized. The entire field of marketing is built on filling a need that someone has (or believes they has) by playing to the fear of missing out, being inadequate, or falling behind. Slow, contemplative, creative thought among the general public is likely bad for business if you’re in social or news media. I’ve written about the dangers of the big data behind social media before [9], but it’s worth repeating that the companies behind these tools have a goal, be it money, power, influence, or something else. The result is a power differential not between humans and machines but between humans using machines and humans being used by machines.

And it’s not just overt marketing designed to make us purchase things: it’s our attention – our time and mental capacity, arguably among the most valuable things we have – which we give freely. As I pull up new YouTube channels that can play hours of background music, I know it’s far cheaper to have an AI generate that content than it would be to have a human do it. I remember the first wave of free platforms that could generate your likeness in the style of a specific artist, as well as the corresponding adage warning us that “if something is free, you’re the product.”

Image credit: [10]

So, in an effort to limit my availability as a product to companies, political parties, and other entities that want my unquestioning, passive attention, I’ve been spending more time exercising my uniquely human capacity for thought in the following ways:

- Limiting my time on social media – the technology keeps getting better at hacking our brains, and our brains are becoming more compliant. If you don’t know where to start, there are resources like the “break up with your phone” challenge [11] and full-scale digital detoxes (like the one I did last year [12]).

- Being skeptical of the content I consume – we now live in a world where anything we see might be fabricated, and if we want it to be true, we’re far more likely to believe it is. Resources like the Media Bias Chart [13] can help you consider the source of the information you encounter, and there are tips for spotting AI-generated deepfake images, which are now everywhere [14].

- Spending time using my brain – just as we can let our critical thinking skills atrophy, we can build them back up. I’m trying to read more books when I can to lengthen my attention span and to meditate more so my brain isn’t always looking for a distraction. I have also added a Chrome extension that blocks the new AI Overviews in my Google results, a feature I was starting to rely on too heavily for my liking.

- Doing art for the sake of art – whether I’m constructing a Halloween costume, a well-written blog post, or a garden patch, I love the act of creation. Not all artists make art for money, but some do, so I try to support my artist friends and avoid “free” AI-generated art. YouTube now requires creators to label realistic AI-generated content (it’s an honor system, but better than nothing) [15], so I give my views there to human creators whenever I can.

There will be plenty more to come on this rapidly evolving topic in the future, but for now I thank you for spending some of your precious time and attention here, reading these human-generated words, and sharing any human-generated reactions you have below.

Thank you!

[1] https://www.denofgeek.com/movies/ian-holm-ash-scariest-monster-in-alien/

[2] https://arcsandsparks.wordpress.com/2012/05/30/art-in-the-final-frontier/

[3] https://www.useloops.com/blog/why-do-we-have-a-fear-of-ai

[4] https://sites.harvard.edu/sitn/2020/10/24/racial-discrimination-in-face-recognition-technology/

[5] https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

[6] https://radicalmoderate.online/man-vs-machine-the-human-condition/

[7] https://www.theatlantic.com/magazine/archive/2008/07/is-google-making-us-stupid/306868/

[8] https://www.reddit.com/r/antiwork/comments/13i54n2/the_future_none_of_us_wanted/?rdt=55727

[9] https://radicalmoderate.online/november-2020-elections-part-2/

[11] https://catherineprice.com/phone-break-up-challenge

[12] https://radicalmoderate.online/digital-detox-ground-rules/

[13] https://adfontesmedia.com/interactive-media-bias-chart/

[14] https://www.npr.org/2023/06/07/1180768459/how-to-identify-ai-generated-deepfake-images

[15] https://www.cnn.com/2024/03/18/tech/youtube-ai-label-for-creators/index.html
