Part 1

I am always taking suggestions for new blog topics, and my friends tend to deliver, whether they know it or not. For as little time as I’ve been spending on social media of late, a few seemingly disparate posts (related to technology, societal control, and dystopian future scenarios) have stuck in my brain and made me think about our relationship with the “thinking machines” we’ve created and rely on as part of our daily lives. I am a self-proclaimed Luddite but also a passionate fan of science fiction, and somewhere in between those two labels is a person trying to learn more about present-day science fact.

Behind the Narrative

The “man vs. machine” conflict in popular culture shows up in some of my favorite universes: Battlestar Galactica (in which the Cylons rebel against their creators), [1] the foundational lore of the Dune series (in which thinking machines are banned), [2] and Star Trek (in which many episodes of The Next Generation deal with the ethical use of technology). [3] Speculative fiction author Hannah Yang notes that this conflict goes hand-in-hand with other common conflicts, such as “man vs. society” and “man vs. self,” because we created the technology with which we’re at odds – so really, if we’re talking about being destroyed by our own creations, it’s still an instance of us destroying ourselves. [4]

Image credit: [6]

A very dear friend and literary scholar taught me always to consider speaker, audience, and motive in all situations – especially in advertising (a term I am using broadly here to include things like political propaganda). There is a reason we fixate on these “man vs. machine” stories: whether it’s machines rebelling against their creators (Terminator [7]) or machines wanting fair treatment (AI: Artificial Intelligence [8]), the core of the tension lies in the grey area of whether humans believe machines to be tools or sentient beings. Humans’ attitudes and actions toward machines, in these cases, tend to become cautionary tales against human hubris.

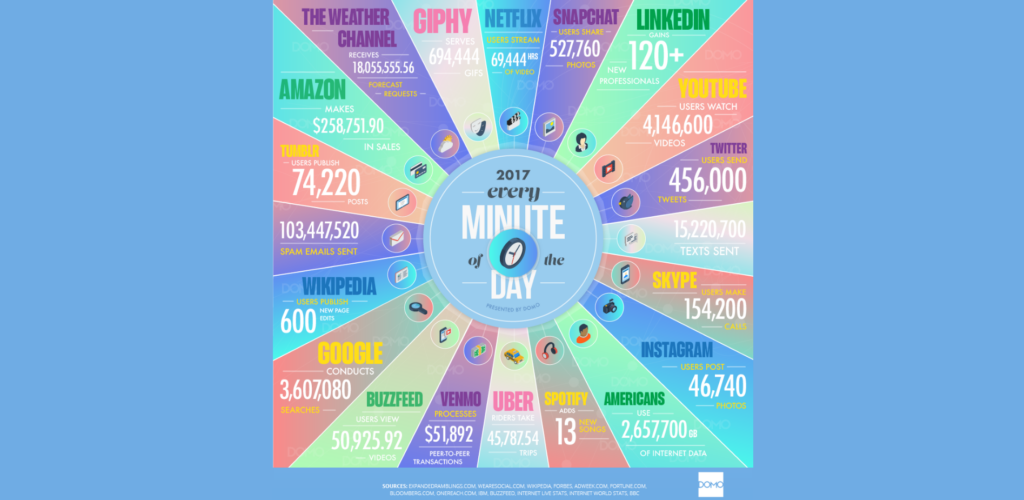

It makes sense that we would be focused on these narratives at a time when artificial intelligence and our reliance on it are growing at astounding rates. As of four years ago, there were estimated to be 64 zettabytes of data in existence, or 64,000,000,000,000,000,000,000 bytes, equivalent to the amount of information that could be held by 33 million human brains. [9] If that number alone weren’t frightening enough, an estimate from McKinsey states that every two years the volume of data across the world doubles in size. [10] With the increasing hours we spend online every day, much of that data includes usage statistics for social media and streaming platforms, not to mention our shopping habits and communications. [11]
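For anyone who wants to check the arithmetic behind those figures, here is a minimal Python sketch. It assumes the decimal (SI) definition of a zettabyte, 10^21 bytes, and treats the "doubles every two years" estimate as if it held exactly, which is a simplification. The function names are my own, chosen only for illustration.

```python
# Assumption: decimal (SI) zettabyte, i.e. 10**21 bytes.
ZETTABYTE = 10**21


def zettabytes_to_bytes(zb):
    """Convert a whole number of zettabytes to bytes."""
    return zb * ZETTABYTE


def projected_volume_zb(start_zb, years, doubling_period_years=2):
    """Project data volume, assuming it doubles every `doubling_period_years`."""
    return start_zb * 2 ** (years / doubling_period_years)


total_bytes = zettabytes_to_bytes(64)
print(f"{total_bytes:,} bytes")

# The "33 million human brains" comparison implies a per-brain capacity.
bytes_per_brain = total_bytes / 33_000_000
print(f"about {bytes_per_brain / 10**15:.2f} petabytes per brain")

# If the doubling estimate held exactly, 64 ZB would grow like this:
for years in (2, 4, 6):
    print(f"after {years} years: {projected_volume_zb(64, years):.0f} ZB")
```

Under that doubling assumption, the total quadruples in four years, so any "as of" figure goes stale quickly.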

Image credit: [12]

My first question when I had to Google how many zeroes were in a zettabyte was “do we need all this information?” (Note: the fact that I had to look it up is now a data point somewhere.) Then I quickly put on my MBA hat and considered that business decisions at the world’s biggest companies are made by analyzing massive stores of data – but not by humans. The human brain cannot deal with the sheer scale of information available, which is why, if we’re going to use that information, we need artificial intelligence to step in. If you’re reading this post, you likely use AI every single day, even if you don’t think of it as such.

Fiction vs. Fact

Indeed, most of us who don’t work in tech probably can’t adequately describe what AI can and cannot do. When my data scientist at work suggested making use of machine learning algorithms to analyze the data we collect, I sat back, raised my eyebrows, and only half-jokingly noted my fear of Skynet. The corner of his mouth twitched slightly upward, and he patiently explained that what he was proposing had no risk of becoming self-aware and launching nuclear weapons. While I believe him, I still have concerns about our continuing and expanding reliance on technology as a society… which is why it seemed like a good idea to take this opportunity to outline what AI currently is (and isn’t).

Image adapted from this source: [13]

IBM’s website breaks down the three different categories of Artificial Intelligence in a straightforward way with real-world examples that even I could understand: [14]

- Artificial Narrow Intelligence (the only type that exists today) “can be trained to perform a single or narrow task, often far faster and better than a human mind can. However, it can’t perform outside of its defined task.” Within the scope of Narrow AI are two specific applications:

    - Reactive Machine AI, which has no memory and uses only available data. Since it can quickly analyze vast amounts of information, the output is seemingly intelligent. Examples include Deep Blue (which beat Garry Kasparov at chess [15]) and Netflix recommendations.

    - Limited Memory AI, which can recall past events and specific outcomes over time. It can decide on a course of action to achieve a desired outcome, and it can improve as it’s trained on more data. Examples include Siri, Alexa, ChatGPT, and self-driving cars.

- Artificial General Intelligence (a.k.a. Strong AI) “can use previous learnings and skills to accomplish new tasks in a different context without the need for human beings to train the underlying models. The ability allows AGI to learn and perform any intellectual task that a human being can.” It is only a theoretical concept today, but specific applications are in development:

    - Theory of Mind AI would be able to understand the thoughts and emotions of other entities and respond appropriately in a simulated way without experiencing those emotions itself. Emotion AI, a type of Theory of Mind AI, is currently in development.

- Artificial Superintelligence “would think, reason, learn, make judgments and possess cognitive abilities that surpass those of human beings. [It] will have evolved beyond the point of understanding human sentiments and experiences to feel emotions, have needs and possess beliefs and desires of [its] own.”

    - Self-Aware AI and other kinds of Super AI as described here are strictly theoretical.
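To make the difference between the two kinds of Narrow AI a little more concrete, here is a toy Python sketch. It is only an illustration under loud assumptions: the function and class names are hypothetical, the "scores" are made up, and nothing here reflects how Deep Blue, Siri, or any real system is actually built. The reactive version decides using only the data in front of it; the limited-memory version also remembers how past choices turned out.

```python
from collections import defaultdict


def reactive_choice(options):
    """Reactive: pick the best-scoring option from the current data only.

    Nothing is remembered between calls.
    """
    return max(options, key=options.get)


class LimitedMemoryChooser:
    """Limited memory: also recalls past outcomes and adjusts its choice."""

    def __init__(self):
        # Maps each action to the list of outcomes observed for it so far.
        self.outcomes = defaultdict(list)

    def record(self, action, outcome):
        """Store how well an action actually turned out."""
        self.outcomes[action].append(outcome)

    def choose(self, options):
        """Prefer the average of remembered outcomes over the initial score."""
        def estimate(action):
            past = self.outcomes.get(action, [])
            return sum(past) / len(past) if past else options[action]

        return max(options, key=estimate)


options = {"a": 0.9, "b": 0.5}  # hypothetical initial scores
print(reactive_choice(options))  # always answers from the scores alone

chooser = LimitedMemoryChooser()
chooser.record("a", 0.1)  # "a" turned out badly in practice
chooser.record("a", 0.2)
print(chooser.choose(options))  # remembered outcomes now change the answer
```

Both versions are still narrow: neither can take what it "learned" here and apply it to a different kind of task without a human setting that up.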

There are already AI functions that perform discrete tasks better than humans can – those are tools, and we use them as such. Even those that can learn cannot take their lessons from one task and apply them to another on their own – they need a human to do that. What I take from all of this research, on a subject that is admittedly very new to me, is that while the concept of artificial intelligence is scary, AI is not dangerous in and of itself, because it is still fundamentally a tool. While AI can crunch massive amounts of data to answer questions, it is still up to humans to identify the critical questions that need to be asked.

But therein lies the rub: is our increasing interaction with AI limiting our capacity for critical thought? Maybe we need to be less worried about machines acquiring human skills and more worried about humans losing the things that make us uniquely human. And that’s where we’ll pick up next week.

Image credit: [16]

What is your favorite man vs. machine story, and what do you like about it? Please share in the comments – and thanks for reading!

[1] https://www.imdb.com/title/tt0407362/

[2] https://en.wikipedia.org/wiki/Dune_(franchise)#The_Butlerian_Jihad

[3] https://www.imdb.com/title/tt0708807/?ref_=ttep_ep9

[4] https://prowritingaid.com/man-vs-technology

[7] https://www.imdb.com/title/tt0088247/

[8] https://www.imdb.com/title/tt0212720/

[9] https://rivery.io/blog/big-data-statistics-how-much-data-is-there-in-the-world/

[11] https://www.domo.com/learn/infographic/data-never-sleeps-5

[13] https://www.domo.com/learn/infographic/data-never-sleeps-5

[14] https://www.ibm.com/think/topics/artificial-intelligence-types

[15] https://www.chess.com/article/view/deep-blue-kasparov-chess

[16] https://simonc.me.uk/tv-review-battlestar-galactica-f5db9f734bf9
